Music artist similarities from co-occurrences in playlists

As a university exercise, I had to calculate music artist similarities based on co-occurrences in user-generated playlists. Such similarity measures play a vital role in many applications of Music Information Retrieval (MIR), for example music recommendation and playlist generation.

Schedl (2008) describes MIR as the extraction, analysis, and usage of information about any kind of music entity (for example, a song or a music artist) on any representation level (for example, audio signal, symbolic MIDI representation of a piece of music, or name of a music artist).

Calculating and analyzing artist similarities, and then making the results visible through data visualization, is a challenging and repetitive job.

Since I’m working on a web-based toolkit dedicated to data analysis and visualization, I decided to use the task of calculating artist similarities as a real-world use case for it.

The approach described in this post tries to generalize the problem domain and aims to make the calculation, analysis and visualization of artist similarities a uniform and repeatable process.

Unveil.js

Before I explain my approach to the exercise, I want to give you a short introduction to Unveil.js, a data-driven visualization toolkit. It provides a simple abstraction layer for visualizations to ease the process of creating reusable charts. To accomplish this, it uses a data-driven methodology.

Data-driven means that the appearance of the result is determined by the underlying data rather than by user-defined plotting options. Visualizations access data directly through a well-defined data interface, a Collection, so there’s no longer a gap between domain data and the data used by the visualization engine.

Such a visualization can be reused with arbitrary data, as long as the data is a valid Collection and satisfies the visualization’s specification (some visualizations exclusively use numbers as their input, others use dates, e.g. timeline plots, and so on).
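To make the idea of “satisfying the visualization specification” a bit more concrete, here is a minimal sketch. It assumes a collection that declares a type for each property, as in the format shown later in this post. The helper `satisfiesSpec` and the shape of the spec object are my own illustration and not part of the Unveil.js API.

```javascript
// Hypothetical helper (not part of Unveil.js): checks whether a collection
// declares every property a visualization needs, with the expected type.
function satisfiesSpec(collection, requiredTypes) {
  return Object.keys(requiredTypes).every(function (key) {
    var property = collection.properties[key];
    return property !== undefined && property.type === requiredTypes[key];
  });
}

var playlists = {
  properties: {
    name: { name: "Playlist Name", type: "string", unique: true },
    song_count: { name: "Song count", type: "number", unique: false }
  },
  items: {}
};

// A chart that needs one numeric property can use this collection;
// a timeline that needs a date property (assumed name: created_at) cannot.
var barchartOk = satisfiesSpec(playlists, { song_count: "number" });
var timelineOk = satisfiesSpec(playlists, { created_at: "date" });
console.log(barchartOk, timelineOk);
```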

You can track the project’s progress on GitHub.

The toolkit’s data layer also provides means to implement arbitrary transformations that operate on raw data. So the challenge was to take the input data, a set of user-generated playlists, and run similarity algorithms on it. The result is a new collection of artists (those that occurred in the original data set), each with a set of similar artists. This set is based on a similarity score, which is determined by the algorithm.

Data Retrieval

First of all, you are faced with the problem of extracting data from different sources, each of which may call for a different retrieval method. This task is mostly done by hand and depends on how the data source is organized. Its result is usually an intermediate, proprietary data format, which is then used for processing and analysis.

If everyone agreed on a uniform data exchange format, common operations like co-occurrence analysis could be applied to it generically.

Therefore, here’s a proposal for a lightweight Collection format, represented as a JSON string, that holds the raw data as well as some meta information about the data’s structure.

For my task (the analysis of playlists) I’ve built a simple data aggregation service that pulls user-generated playlists from Last.fm and translates them into the uniform collection exchange format.

I’ve used the following strategy to get some suitable playlists:

- Load a set of seed artists (the artists whose relationships you’re ultimately interested in)

- Collect the top fans (users) of all seed artists

- Pull the playlists of every user

- Keep only those artists and songs in the resulting playlists that belong to the set of seed artists

With this approach I’m likely to get playlists that contain artists from the seed set, while the noise is filtered out. Once the data is in this format, it’s quite easy to operate on.

Here’s what a resulting collection of playlists looks like:
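The strategy above can be sketched roughly as follows. This is not the actual aggregation service: `collectPlaylists`, `fetchTopFans` and `fetchPlaylists` are hypothetical names, the two fetch functions are supplied by the caller and stand in for whatever the data source offers, and the playlist shape (`id`, `name`, `tracks` with `artist` and `title`) is an assumption made for the example.

```javascript
// Sketch of the aggregation strategy. fetchTopFans and fetchPlaylists are
// hypothetical callbacks supplied by the caller, not real Last.fm API calls.
function collectPlaylists(seedArtists, fetchTopFans, fetchPlaylists) {
  var seeds = {};
  seedArtists.forEach(function (artist) { seeds[artist] = true; });

  var items = {};
  seedArtists.forEach(function (artist) {
    fetchTopFans(artist).forEach(function (user) {
      fetchPlaylists(user).forEach(function (playlist) {
        // Keep only tracks whose artist is in the seed set.
        var tracks = playlist.tracks.filter(function (t) {
          return seeds[t.artist] === true;
        });
        if (tracks.length === 0) return; // nothing relevant in this playlist

        var artists = [];
        tracks.forEach(function (t) {
          if (artists.indexOf(t.artist) === -1) artists.push(t.artist);
        });

        // Keyed by playlist id, so a playlist reached via two fans is stored once.
        items[playlist.id] = {
          name: playlist.name,
          artists: artists,
          songs: tracks.map(function (t) { return t.artist + " - " + t.title; }),
          // Assumption: song_count is the size of the unfiltered playlist.
          song_count: playlist.tracks.length
        };
      });
    });
  });
  return items;
}

// Usage with in-memory stubs instead of a real data source.
var fans = { "Beck": ["alice"], "Madonna": ["alice", "bob"] };
var lists = {
  alice: [{ id: "1", name: "Mix", tracks: [
    { artist: "Beck", title: "Think I'm In Love" },
    { artist: "Unknown Band", title: "Filler" },
    { artist: "Madonna", title: "Forbidden Love" }
  ] }],
  bob: []
};
var result = collectPlaylists(
  ["Beck", "Madonna"],
  function (artist) { return fans[artist] || []; },
  function (user) { return lists[user] || []; }
);
console.log(JSON.stringify(result, null, 2));
```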

    {
      "properties": {
        "name": {
          "name": "Playlist Name",
          "type": "string",
          "unique": true
        },
        "artists": {
          "name": "Artists",
          "type": "string",
          "unique": false
        },
        "songs": {
          "name": "Songs",
          "type": "string",
          "unique": false
        },
        "song_count": {
          "name": "Song count",
          "type": "number",
          "unique": false
        }
      },
      "items": {
        "4976660": {
          "name": "JD_Turk's.Vol.2",
          "artists": [
            "Beck",
            "Michael Jackson",
            "Green Day",
            "Madonna"
          ],
          "songs": [
            "Beck - Think I'm In Love",
            "Michael Jackson - Remember the Time",
            "Green Day - Last Night On Earth",
            "Madonna - Forbidden Love"
          ],
          "song_count": 8
        },
        "1380177": {
          "name": "Random!!!",
          "artists": [
            "Daft Punk",
            "Depeche Mode",
            "Moloko",
            "Radiohead"
          ],
          "songs": [
            "Daft Punk - Something About Us",
            "Depeche Mode - Precious",
            "Moloko - Cannot Contain This",
            "Radiohead - Nude"
          ],
          "song_count": 4
        }
      }
    }

See the full collection here.

You can read more about Collections on the Unveil.js documentation page.
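Co-occurrence analysis needs the opposite view of this data: for every artist, the playlists it appears in. As a minimal sketch in plain JavaScript (the function name `playlistsByArtist` is my own and not part of Unveil.js), the `items` of the collection can be inverted like this:

```javascript
// Build a lookup from artist name to the ids of the playlists it occurs in.
function playlistsByArtist(items) {
  var index = {};
  Object.keys(items).forEach(function (playlistId) {
    items[playlistId].artists.forEach(function (artist) {
      if (!Object.prototype.hasOwnProperty.call(index, artist)) {
        index[artist] = [];
      }
      // Guard against an artist being listed twice in the same playlist.
      if (index[artist].indexOf(playlistId) === -1) {
        index[artist].push(playlistId);
      }
    });
  });
  return index;
}

var items = {
  "4976660": { artists: ["Beck", "Michael Jackson", "Green Day", "Madonna"] },
  "1380177": { artists: ["Daft Punk", "Depeche Mode", "Moloko", "Radiohead"] }
};
var index = playlistsByArtist(items);
console.log(index["Beck"]); // [ '4976660' ]
```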

Gaining Similarity Measures

To measure similarity on that data, I’ve implemented two different algorithms that calculate a similarity score based on co-occurrences. Both have been described in detail by Schedl and Knees (2009).

Below you can see the essential parts of the transformation functions I’ve implemented.

Normalized co-occurrences, described by Pachet et al., 2001:

This approach simply counts co-occurrences and normalizes the result using a symmetric function.
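Written as a formula, the symmetric normalization used in my implementation reads as follows. The notation is my own for this post: cooc(a_i, a_j) is the number of playlists that contain both artists, so cooc(a_i, a_i) is simply the number of playlists that contain a_i.

```latex
\mathrm{sim}(a_i, a_j) = \frac{1}{2}\left(
  \frac{\mathrm{cooc}(a_i, a_j)}{\mathrm{cooc}(a_i, a_i)} +
  \frac{\mathrm{cooc}(a_j, a_i)}{\mathrm{cooc}(a_j, a_j)}
\right)
```

Each fraction is the share of one artist’s playlists that also contain the other artist, and averaging the two makes the score independent of argument order.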

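    // Number of playlists in which both artists occur.
    function coOccurrences(v1, v2) {
      var items1 = v1.list('items'),
          items2 = v2.list('items');
      return items1.intersect(items2).length;
    }

    // Symmetric normalization: the average of the two relative co-occurrence
    // frequencies. coOccurrences(v, v) is the number of playlists containing v.
    function similarity(v1, v2) {
      return 0.5 * (coOccurrences(v1, v2) / coOccurrences(v1, v1)
                  + coOccurrences(v2, v1) / coOccurrences(v2, v2));
    }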

See the full implementation here.
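To see the numbers at work without the toolkit, here is a small self-contained sketch of the same calculation in plain JavaScript. It assumes each artist is represented by an array of unique playlist ids, which replaces the `list('items')` and `intersect` calls of the Collection API. The playlist ids are made up.

```javascript
// Count the playlists shared by two artists, each given as an array of
// unique playlist ids.
function coOccurrences(playlistsA, playlistsB) {
  return playlistsA.filter(function (id) {
    return playlistsB.indexOf(id) !== -1;
  }).length;
}

// Average of the two relative co-occurrence frequencies.
function similarity(playlistsA, playlistsB) {
  return 0.5 * (coOccurrences(playlistsA, playlistsB) / playlistsA.length +
                coOccurrences(playlistsB, playlistsA) / playlistsB.length);
}

var beck = ["p1", "p2", "p3", "p4"]; // occurs in four playlists
var madonna = ["p4", "p5"];          // occurs in two, one shared with Beck

console.log(similarity(beck, madonna)); // 0.5 * (1/4 + 1/2) = 0.375
```

One shared playlist is a quarter of Beck’s playlists but half of Madonna’s, and the symmetric function averages the two views.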

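Weighted co-occurrences at different distances, described by Baccigalupo et al.:

This approach counts co-occurrences at several distances within a playlist and gives closer pairs a higher weight (1, 0.8 and 0.64 for distances 0, 1 and 2).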

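    // Number of shared playlists in which v1 and v2 occur at distance d.
    // checkDistance is not shown in this excerpt.
    function coOccurencesAtDistance(v1, v2, d) {
      var items1 = v1.list('items'),
          items2 = v2.list('items'),
          playlists = items1.intersect(items2);
      return playlists.select(function(p) {
        return checkDistance(p, v1, v2, d);
      }).length;
    }

    // Closer pairs count more: the weight shrinks by a factor of 0.8 per step.
    function similarity(v1, v2) {
      return 1    * coOccurencesAtDistance(v1, v2, 0) +
             0.8  * coOccurencesAtDistance(v1, v2, 1) +
             0.64 * coOccurencesAtDistance(v1, v2, 2);
    }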

See the full implementation here.
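Here is a self-contained sketch of the weighted variant in plain JavaScript. The excerpt above does not show `checkDistance`, so the version here is a hypothetical stand-in and not necessarily what the full implementation does: it treats a playlist as an ordered array of artist names and counts a pair at distance d if exactly d other entries lie between the two artists, in either order.

```javascript
// Hypothetical stand-in for checkDistance: true if artists a and b appear in
// the ordered playlist with exactly d other entries between them (any order).
function checkDistance(playlist, a, b, d) {
  for (var i = 0; i < playlist.length; i++) {
    if (playlist[i] !== a) continue;
    // Out-of-range indices yield undefined, which never equals an artist name.
    if (playlist[i - (d + 1)] === b || playlist[i + (d + 1)] === b) return true;
  }
  return false;
}

// Number of playlists in which a and b occur at distance d.
function coOccurencesAtDistance(playlists, a, b, d) {
  return playlists.filter(function (p) {
    return checkDistance(p, a, b, d);
  }).length;
}

// Same weights as in the post: 1, 0.8 and 0.64 for distances 0, 1 and 2.
function similarity(playlists, a, b) {
  var weights = [1, 0.8, 0.64];
  return weights.reduce(function (score, weight, d) {
    return score + weight * coOccurencesAtDistance(playlists, a, b, d);
  }, 0);
}

var playlists = [
  ["Beck", "Madonna", "Green Day"],           // adjacent (d = 0)
  ["Beck", "Moloko", "Madonna"],              // one entry between (d = 1)
  ["Madonna", "Moloko", "Radiohead", "Beck"]  // two entries between (d = 2)
];

// One hit per distance, so the score is roughly 1 + 0.8 + 0.64.
console.log(similarity(playlists, "Beck", "Madonna"));
```

Unlike the normalized measure, this score is not bounded by 1. It grows with the number of playlists in which the two artists appear close together.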

Visualization of the results

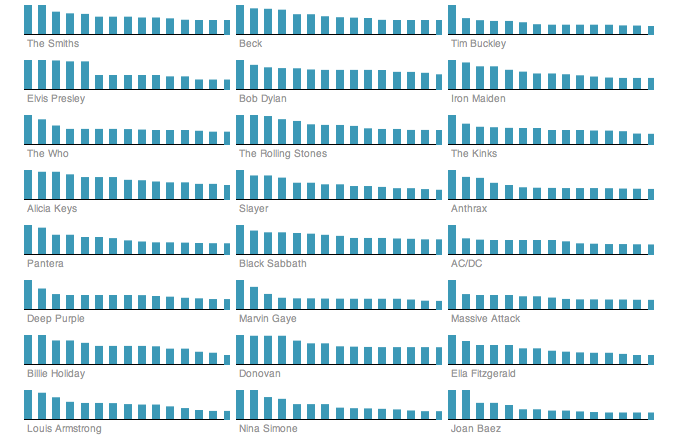

To visualize the result, I implemented an interactive visualization that uses a number of small bar charts, each showing the similar artists for a certain artist.

Visual representation of the result
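Each small bar chart needs its artists sorted by score. A minimal sketch of that preparation step could look like this. The function `topSimilar` is my own naming, the scores are made-up illustrative values, and the actual charts in Unveil.js may prepare their data differently.

```javascript
// Turn a { artistName: score } map into the n highest-scoring entries,
// ready to be drawn as bars.
function topSimilar(scores, n) {
  return Object.keys(scores)
    .map(function (name) { return { artist: name, score: scores[name] }; })
    .sort(function (x, y) { return y.score - x.score; })
    .slice(0, n);
}

// Made-up similarity scores for a single artist.
var bars = topSimilar({ "Madonna": 0.375, "Moloko": 0.1, "Radiohead": 0.5 }, 2);
console.log(bars);
```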

Conclusion

The algorithms implemented here do not claim to be state-of-the-art similarity measurement methods. However, the fact that you can swap in any other implementation makes similarity analysis a repeatable process. If you’re considering using this framework for your own data analysis tasks, just let me know. I’d be glad to get you started.

References:

- Automatically Extracting, Analyzing, and Visualizing Information on Music Artists (M. Schedl, 2008)

- Context-based Music Similarity Estimation (M. Schedl, P. Knees, 2009)