Music artist similarities from co-occurrences in playlists

As a university exercise, I had to calculate music artist similarities based on co-occurrences in user-generated playlists. Such similarity measures play a vital role in many application scenarios of Music Information Retrieval (MIR), e.g., in music recommendation and playlist generation.

Schedl (2008) describes music information retrieval (MIR) as the extraction, analysis, and usage of information about any kind of music entity (for example, a song or a music artist) on any representation level (for example, the audio signal, a symbolic MIDI representation of a piece of music, or the name of a music artist).

Calculating and analyzing artist similarities, and making the results visible with data visualization techniques, is quite a challenging and repetitive job.

Since I’m working on a web-based toolkit dedicated to data analysis and visualization, I decided to use this task of calculating artist similarities as a real-world use case for it.

The approach described in this post tries to generalize the problem domain and aims to make the calculation, analysis and visualization of artist similarities a uniform and repeatable process.

Unveil.js

Before I explain my approach to the exercise, I want to give you a short introduction to Unveil.js, a data-driven visualization toolkit. It provides a simple abstraction layer for visualizations that eases the creation of reusable charts, and it does so by following a data-driven methodology.

Data-driven means that the appearance of the result is determined by the underlying data rather than by user-defined plotting options. Visualizations access data directly through a well-defined data interface, a Collection, so there’s no longer a gap between domain data and the data used by the visualization engine.

Such visualizations can be reused with arbitrary data, as long as the data is a valid Collection and satisfies the visualization’s specification (some visualizations exclusively take numbers as input, others use dates, e.g. timeline plots, and so on).
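A visualization’s specification could be checked against a Collection roughly as follows. This is a hypothetical sketch, not part of Unveil.js: the helper names `satisfiesSpec` and `itemsConformToSchema` are made up, the check assumes the JSON exchange format described in this post (a `properties` map declaring a `type` per property, plus an `items` map), and it only handles the JSON primitive types `string` and `number`.

```javascript
// Hypothetical helpers, not part of Unveil.js. They assume a Collection in
// the JSON exchange format used in this post: { properties: {...}, items: {...} }.

// True if every type the visualization requires is offered by some property.
function satisfiesSpec(collection, requiredTypes) {
  const available = Object.values(collection.properties).map((p) => p.type);
  return requiredTypes.every((t) => available.includes(t));
}

// True if every item's values match the declared property types.
// Multi-valued properties (such as "artists") are stored as arrays.
// Only the JSON primitives "string" and "number" are handled here.
function itemsConformToSchema(collection) {
  return Object.values(collection.items).every((item) =>
    Object.entries(collection.properties).every(([key, prop]) => {
      const values = Array.isArray(item[key]) ? item[key] : [item[key]];
      return values.every((v) => typeof v === prop.type);
    })
  );
}

// Usage with a trimmed-down playlist collection:
const sample = {
  properties: {
    name: { name: "Playlist Name", type: "string", unique: true },
    song_count: { name: "Song count", type: "number", unique: false }
  },
  items: { "1380177": { name: "Random!!!", song_count: 4 } }
};
console.log(satisfiesSpec(sample, ["string", "number"])); // true
console.log(satisfiesSpec(sample, ["date"])); // false
console.log(itemsConformToSchema(sample)); // true
```

A real toolkit would also need richer types such as dates; the point here is only that a visualization can decide from the declared schema alone whether it can render a given Collection.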

You can track the project’s progress on GitHub.

The toolkit’s data layer also provides means to implement arbitrary transformations that operate on raw data. So the challenge was to take the input data, a set of user-generated playlists, and run similarity-measurement algorithms on it. The result is a new collection of the artists that occurred in the original data set, each featuring a set of similar artists. This set is based on a similarity score, which is determined by the algorithm.

Data Retrieval

First, you are faced with the problem of extracting data from different sources, where various information retrieval methods need to be applied. This task mostly has to be done by hand and depends on how the data source is organized. Its result is usually an intermediate, proprietary data format, which is then used to process and analyze the data.

If you agree on a uniform data exchange format, you can generically apply common operations such as co-occurrence analysis to it.

Therefore, here’s a proposal for a lightweight Collection format, represented as a JSON string, that holds the raw data as well as some meta information about the data’s structure.

For my task (the analysis of playlists) I’ve built a simple data aggregation service that pulls user-generated playlists from Last.fm and translates them into the uniform Collection exchange format.

I’ve used the following strategy to get some suitable playlists:

- Load a set of seed artists (the artists whose relationships you’re ultimately interested in)

- Collect top fans (users) for all seed artists

- For every user, pull their playlists

- The resulting playlists contain only those artists and songs that are listed in the set of seed artists

Using this approach, I most likely get playlists that contain artists from the set of seed artists, and the noise is eliminated. Once you have the data in this format, it’s quite easy to operate on it.
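The last step of the strategy can be sketched in plain JavaScript. Fetching top fans and their playlists from Last.fm is left out; the raw playlist shape `{ name, songs: [{ artist, title }] }` and the function name `restrictToSeedArtists` are assumptions, not Last.fm’s API or the author’s service. Dropping playlists that contain no seed artist at all, and keeping the unfiltered length as `song_count`, are likewise choices made only for this sketch.

```javascript
// Hypothetical sketch of the final filtering step. The raw playlist shape
// { name, songs: [{ artist, title }] } is an assumption, not Last.fm's API.
function restrictToSeedArtists(rawPlaylists, seedArtists) {
  const seeds = new Set(seedArtists);
  const items = {};
  for (const [id, playlist] of Object.entries(rawPlaylists)) {
    const songs = playlist.songs.filter((s) => seeds.has(s.artist));
    if (songs.length === 0) continue; // no seed artist at all: drop the playlist
    items[id] = {
      name: playlist.name,
      artists: [...new Set(songs.map((s) => s.artist))], // distinct seed artists
      songs: songs.map((s) => `${s.artist} - ${s.title}`),
      // Assumption: song_count keeps the unfiltered playlist length.
      song_count: playlist.songs.length
    };
  }
  return items;
}

// Usage: only playlist "1" survives, reduced to its Radiohead song.
const raw = {
  "1": { name: "Mix", songs: [
    { artist: "Radiohead", title: "Nude" },
    { artist: "Unknown Band", title: "Noise" }
  ] },
  "2": { name: "Other", songs: [{ artist: "Unknown Band", title: "Hum" }] }
};
console.log(restrictToSeedArtists(raw, ["Radiohead", "Moloko"]));
```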

Here’s what a resulting collection of playlists looks like:

{
  "properties": {
    "name": {
      "name": "Playlist Name",
      "type": "string",
      "unique": true
    },
    "artists": {
      "name": "Artists",
      "type": "string",
      "unique": false
    },
    "songs": {
      "name": "Songs",
      "type": "string",
      "unique": false
    },
    "song_count": {
      "name": "Song count",
      "type": "number",
      "unique": false
    }
  },
  "items": {
    "4976660": {
      "name": "JD_Turk's.Vol.2",
      "artists": [
        "Beck",
        "Michael Jackson",
        "Green Day",
        "Madonna"
      ],
      "songs": [
        "Beck - Think I'm In Love",
        "Michael Jackson - Remember the Time",
        "Green Day - Last Night On Earth",
        "Madonna - Forbidden Love"
      ],
      "song_count": 8
    },
    "1380177": {
      "name": "Random!!!",
      "artists": [
        "Daft Punk",
        "Depeche Mode",
        "Moloko",
        "Radiohead"
      ],
      "songs": [
        "Daft Punk - Something About Us",
        "Depeche Mode - Precious",
        "Moloko - Cannot Contain This",
        "Radiohead - Nude"
      ],
      "song_count": 4
    }
  }
}

See the full collection here.

You can read more about Collections at the Unveil.js documentation page.
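To compute co-occurrences, it helps to look at the collection from the artists’ side: for each artist, the set of playlists it occurs in. The following is a hypothetical plain-JavaScript sketch of that inversion (`artistIndex` is a made-up name, not part of the Unveil.js API). It shows one way such per-artist playlist sets could be obtained, not necessarily how `v.list('items')` works internally.

```javascript
// Hypothetical sketch in plain JavaScript (not the Unveil.js API): invert a
// playlist Collection into a Map from artist name to the Set of playlist ids
// that artist appears in.
function artistIndex(collection) {
  const index = new Map();
  for (const [playlistId, playlist] of Object.entries(collection.items)) {
    for (const artist of playlist.artists) {
      if (!index.has(artist)) index.set(artist, new Set());
      index.get(artist).add(playlistId);
    }
  }
  return index;
}

// Usage with the two playlists from the example collection:
const playlists = { items: {
  "4976660": { artists: ["Beck", "Michael Jackson", "Green Day", "Madonna"] },
  "1380177": { artists: ["Daft Punk", "Depeche Mode", "Moloko", "Radiohead"] }
} };
const index = artistIndex(playlists);
console.log(index.size); // 8
console.log([...index.get("Moloko")]); // [ '1380177' ]
```

With such an index, the number of playlists two artists share is just the size of the intersection of their sets.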

Gaining Similarity Measures

In order to measure similarity on that data, I’ve implemented two different algorithms that calculate a similarity score based on co-occurrences. Both algorithms are described in detail by Schedl and Knees (2009).

Below you can see the essential parts of the transformation functions I’ve implemented.

Normalized co-occurrences, described by Pachet et al., 2001:

This approach simply counts co-occurrences and normalizes the result using a symmetric function.

function coOccurrences(v1, v2) {
  var items1 = v1.list('items'),
      items2 = v2.list('items');
  return items1.intersect(items2).length;
};

function similarity(v1, v2) {
  return 0.5 * (coOccurrences(v1, v2) / coOccurrences(v1, v1)
              + coOccurrences(v2, v1) / coOccurrences(v2, v2));
};

See the full implementation here.
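For readers without Unveil.js at hand, here is a self-contained sketch of the same normalized measure using plain `Set`s. It assumes each artist is represented by the set of ids of the playlists it occurs in; `normalizedSimilarity` is a hypothetical name, and the guard against empty sets is an addition of this sketch.

```javascript
// Sketch of the normalized co-occurrence measure with plain Sets instead of
// Unveil.js collections. Assumption: each artist is given as the Set of
// playlist ids it occurs in.

// C(a, b): number of playlists containing both artists.
function coOccurrences(playlistsA, playlistsB) {
  let count = 0;
  for (const id of playlistsA) if (playlistsB.has(id)) count++;
  return count;
}

// 0.5 * (C(a,b)/C(a,a) + C(b,a)/C(b,b)); C(a,a) is just the size of playlistsA.
function normalizedSimilarity(playlistsA, playlistsB) {
  if (playlistsA.size === 0 || playlistsB.size === 0) return 0; // avoid 0/0
  const shared = coOccurrences(playlistsA, playlistsB);
  return 0.5 * (shared / playlistsA.size + shared / playlistsB.size);
}

// Usage: a occurs in 4 playlists, b in 2, and they share 2.
const a = new Set(["p1", "p2", "p3", "p4"]);
const b = new Set(["p1", "p2"]);
console.log(normalizedSimilarity(a, b)); // 0.75
```

Averaging the two ratios is what makes the score symmetric: a rarely played artist that always appears next to a popular one gets a high ratio on its side, while the popular artist’s side stays low.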

Weighted co-occurrences at different distances, described by Baccigalupo et al.:

function coOccurencesAtDistance(v1, v2, d) {
  var items1 = v1.list('items'),
      items2 = v2.list('items'),
      playlists = items1.intersect(items2);
  // checkDistance (not shown in this excerpt) tests whether v1 and v2
  // occur at distance d within playlist p
  return playlists.select(function(p) {
    return checkDistance(p, v1, v2, d);
  }).length;
};

function similarity(v1, v2) {
  // co-occurrences at larger distances get smaller weights: 1, 0.8, 0.64
  return 1    * coOccurencesAtDistance(v1, v2, 0) +
         0.8  * coOccurencesAtDistance(v1, v2, 1) +
         0.64 * coOccurencesAtDistance(v1, v2, 2);
};

See the full implementation here.
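The excerpt above relies on `checkDistance`, which is not shown. The sketch below fills it in under an assumed definition: a playlist is an ordered array of artist names (one entry per song), and distance `d` means that `d` songs lie between the two artists, so `d = 0` means they are adjacent. Both this definition and the function names are assumptions; the author’s implementation may differ.

```javascript
// Sketch of the distance-weighted measure with a possible checkDistance.
// Assumption: a playlist is an ordered array of artist names (one entry per
// song) and "distance d" means d songs lie between the two artists, so
// d = 0 means they are adjacent.
function checkDistance(playlist, a, b, d) {
  for (let i = 0; i < playlist.length; i++) {
    if (playlist[i] !== a) continue;
    // Out-of-range indices yield undefined, which never equals b.
    if (playlist[i - d - 1] === b || playlist[i + d + 1] === b) return true;
  }
  return false;
}

// Number of playlists in which a and b co-occur at distance d. Filtering
// all playlists is equivalent to intersecting first, because checkDistance
// only succeeds when both artists are present.
function coOccurrencesAtDistance(playlists, a, b, d) {
  return playlists.filter((p) => checkDistance(p, a, b, d)).length;
}

// Same weights as above: 1, 0.8 and 0.64 for distances 0, 1 and 2.
function weightedSimilarity(playlists, a, b) {
  return 1 * coOccurrencesAtDistance(playlists, a, b, 0) +
    0.8 * coOccurrencesAtDistance(playlists, a, b, 1) +
    0.64 * coOccurrencesAtDistance(playlists, a, b, 2);
}

// Usage: Moloko and Radiohead are adjacent in the first playlist and have
// two songs between them in the second, so the score is 1 + 0.64.
const lists = [
  ["Moloko", "Radiohead", "Beck"],
  ["Moloko", "Beck", "Madonna", "Radiohead"],
  ["Radiohead", "Madonna"]
];
console.log(weightedSimilarity(lists, "Moloko", "Radiohead"));
```

Because `checkDistance` looks in both directions, this version of the score is symmetric in `a` and `b`.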

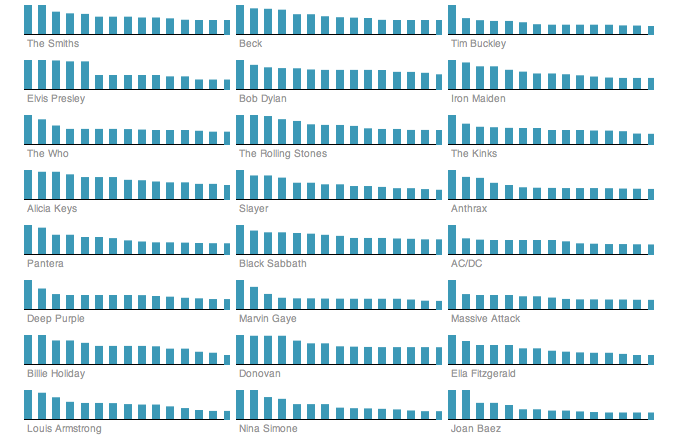

Visualization of the results

To visualize the results, I implemented an interactive visualization that uses a number of small bar charts, each showing the artists most similar to a given artist.

Visual representation of the result

Conclusion

The implemented algorithms do not claim to be state-of-the-art similarity measures. However, the fact that you can simply swap in any other implementation makes similarity analysis a repeatable process. If you’re considering using this framework for your own data analysis tasks, just let me know. I’d be glad to help you get started.
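Swapping in another measure can be as simple as passing a different function. The hypothetical sketch below ranks, for every artist, all other artists by an arbitrary `sim(a, b)` and keeps the top `k` with a positive score; per-artist ranked lists like these are the kind of data a small bar chart per artist could display. `topSimilar` and the toy score table are made up for illustration.

```javascript
// Hypothetical sketch: rank, for every artist, all other artists by a
// pluggable similarity function sim(a, b) and keep the k best with a
// positive score. Swapping the measure only means passing another function.
function topSimilar(artists, sim, k) {
  const result = {};
  for (const a of artists) {
    result[a] = artists
      .filter((b) => b !== a)
      .map((b) => ({ artist: b, score: sim(a, b) }))
      .filter((entry) => entry.score > 0)
      .sort((x, y) => y.score - x.score)
      .slice(0, k);
  }
  return result;
}

// Usage with a toy score table standing in for either measure above.
const scores = { "Beck|Moloko": 0.2, "Beck|Radiohead": 0.6, "Moloko|Radiohead": 0.75 };
const toySim = (a, b) => scores[[a, b].sort().join("|")] || 0;
console.log(topSimilar(["Beck", "Moloko", "Radiohead"], toySim, 1).Radiohead);
// [ { artist: 'Moloko', score: 0.75 } ]
```

The ranking code never needs to know which measure produced the scores, which is what keeps the analysis repeatable when the measure changes.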

References:

- Automatically Extracting, Analyzing, and Visualizing Information on Music Artists (M. Schedl, 2008)

- Context-based Music Similarity Estimation (M. Schedl, P. Knees, 2009)

Hi, what about switching your data model to an ontology-based one? You describe the used properties at the beginning of your serialization, so why not outsource them into an ontology (or several ontologies for different purposes)? For example (I’ve rewritten your first playlist here):

"ex:Playlist01": {
  "rdf:type": "pbo:Playlist",
  "dc:title": "JD_Turk's.Vol.2",
  "pbo:playlist_slot": {
    "rdf:type": "pbo:PlaylistSlot",
    "pbo:playlist_item": "http://dbtune.org/musicbrainz/page/track/69fd2cd2-e207-45ba-920e-1a0f7f52a3a1",
    "olo:index": 1
  },
  "pbo:playlist_slot": {
    "rdf:type": "pbo:PlaylistSlot",
    "pbo:playlist_item": "http://dbtune.org/musicbrainz/page/track/3b13400d-0ea7-4b9e-b696-c3bc830eb2da",
    "olo:index": 2
  },
  "olo:slot": {
    "rdf:type": "pbo:PlaylistSlot",
    "pbo:playlist_item": "http://dbtune.org/musicbrainz/page/track/036005ec-8867-4615-81c9-a5f608a3ec71",
    "olo:index": 3
  },
  "olo:slot": {
    "rdf:type": "pbo:PlaylistSlot",
    "pbo:playlist_item": "http://dbtune.org/musicbrainz/page/track/0488434c-2968-48c2-bd78-378f60a7bf41",
    "olo:index": 4
  },
  "olo:length": 8
}

Used Ontologies:

- DC: http://purl.org/dc/elements/1.1/

- Ordered List Ontology: http://purl.org/ontology/olo/orderedlistontology.html (olo = http://purl.org/ontology/olo/core#)

- Play Back Ontology: http://purl.org/ontology/pbo/playbackontology.html (pbo = http://purl.org/ontology/pbo/core#)

The track URIs are only examples; however, each of them represents the music track you’ve described in the corresponding string.

Cheers,

Bob

Well, you’re basically right. Using ontologies for modeling data is a good thing. However, I’m describing a client-side scenario here, which shouldn’t work on top of RDF directly. When working with concrete data in a local scenario, you don’t need globally unique entity URIs; you just need locally unique keys.

What I mean is that I see the Collection API as a separate (client-side) layer that doesn’t need the complexity of RDF (which has to work in a global scenario). So you can (but don’t have to) use readable local keys that are unique within the collection of data you’re looking at.

This works perfectly well within a Semantic Web scenario.

You can use the Collection API on top of any Semantic Web infrastructure. For example, you would query data from a SPARQL endpoint just as usual. What you have to do is transform the output so that it fulfills the specification of a uv.Collection. By doing this, you’re able to visualize arbitrary data (with a certain structure, of course) with the same visualization that sits on top of the Collection API.
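The transformation step described here could look like the following sketch. It takes a result set in the W3C SPARQL 1.1 Query Results JSON Format (`head.vars`, `results.bindings`, each binding with `type`, `value` and optionally `datatype`) and builds the Collection exchange format from this post. The function name, the choice of one variable as item key, and the rule that numeric XSD datatypes become `number` properties are assumptions of this sketch, not part of uv.Collection.

```javascript
// Hypothetical sketch: convert a result set in the SPARQL 1.1 Query Results
// JSON format into the Collection exchange format from this post. keyVar is
// the variable whose value becomes the item key (an assumption: here a URI).
const XSD = "http://www.w3.org/2001/XMLSchema#";
const NUMERIC_TYPES = new Set(
  ["integer", "int", "long", "decimal", "double", "float"].map((t) => XSD + t)
);

function sparqlToCollection(results, keyVar) {
  const vars = results.head.vars.filter((v) => v !== keyVar);
  const properties = {};
  const items = {};
  for (const v of vars) properties[v] = { name: v, type: "string", unique: false };
  for (const row of results.results.bindings) {
    const key = row[keyVar].value;
    if (!items[key]) items[key] = {};
    for (const v of vars) {
      const binding = row[v];
      if (!binding) continue; // unbound (e.g. OPTIONAL) variable
      // Simplification: a variable is typed "number" once any numeric literal shows up.
      const numeric = NUMERIC_TYPES.has(binding.datatype);
      if (numeric) properties[v].type = "number";
      const value = numeric ? Number(binding.value) : binding.value;
      // One subject may span several rows, so values are collected in arrays.
      if (!items[key][v]) items[key][v] = [];
      if (!items[key][v].includes(value)) items[key][v].push(value);
    }
  }
  return { properties, items };
}

// Usage with a hand-written result set (example.org URIs are placeholders).
const res = {
  head: { vars: ["artist", "name", "albums"] },
  results: { bindings: [{
    artist: { type: "uri", value: "http://example.org/artist/1" },
    name: { type: "literal", value: "Moloko" },
    albums: { type: "literal", value: "4", datatype: XSD + "integer" }
  }] }
};
const col = sparqlToCollection(res, "artist");
console.log(col.items["http://example.org/artist/1"]); // { name: [ 'Moloko' ], albums: [ 4 ] }
console.log(col.properties.albums.type); // number
```

Using the URI as the item key keeps the link back to the original resource, while the visualization itself only sees plain keys and values.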

I’ve implemented a simple translation service that fetches music artists from Freebase.com (using an MQL query) and translates them into the Collection format. It’s just a few lines of code, thanks to Freebase ACRE. See here: http://acre.freebase.com/#!path=//collections.ma.user.dev/artists

The output, which conforms to uv.Collection, looks like this:

http://collections.freebaseapps.com/artist

This data is used by the Stacks example: http://quasipartikel.at/unveil/examples/stacks.html

Please use a WebKit-based browser (Chrome or Safari), as mouse picking doesn’t work in Firefox yet.

You could easily change the data to be displayed by just providing a different Collection of items.

Well, I think if you include the model directly (somehow), you won’t need the transformation step. You’ll have (resolvable) URIs and the meaning of the used concepts in the background, and you can use this knowledge for further browsing and exploration.

There are also RDF/JSON representation formats available and/or in development.

I’m not really familiar with Freebase. However, judging from your example, the artist.ids (e.g. “/en/atari_teenage_riot”) may represent the resolvable part here.

Finally, I’m not sure whether visualizing “… arbitrary data [...] with the same visualization …” is always good or should be the goal. We often have to create customized visualizations based on use cases, users’ needs and the underlying domain.

My conclusion is that we need a knowledge representation based on Semantic Web ontologies on the client side as well, in order to interact with the Linked Open Data cloud.

This is an interesting dispute. Really! Thanks for bringing it up. I’m not claiming that the proposed model is necessary in a thoroughly linked-data scenario. It’s just an abstraction that I found useful for getting my tasks done in cases where no semantically interlinked data is available, which is most often the case. But even when it is available, it’s not wrong to have another layer, imo. I don’t think that (say, proprietary) local formats and standards like RDF rule each other out. If you design those interfaces to be linked-data aware, it’s legitimate to have them. And there’s lots of data that is never meant to be semantically interlinked (e.g. turnover reports within a company). Why shouldn’t we be able to process (visualize) such data as well?

My experience with using the standards (RDF) directly in client apps (I’ve tried it) was that they’re simply too complex. I wanted to map domain data to a simple data model that is easy to grasp. It’s easier to build client apps, like visualizations, when you don’t need knowledge about the (perhaps verbose) vocabulary an ontology uses.

I really like the approach Freebase takes. It’s basically a proprietary format. They use JSON rather than XML, and they have their own query language, MQL (also expressed in JSON). However, their graph of entities maps to RDF as well, so they use RDF (along with common ontologies) as an export format. What’s also interesting about Freebase is that they allow users to contribute to schemas (=ontologies) as well. So Freebase sees the creation of ontologies as a continuous process (rather than having static ones defined by one interest group). I think that while their system is still complex under the hood, its public interface is less verbose, less scientific, and more user-friendly.

What I mean by reusable visualizations is not that there’s one implementation satisfying all needs. But what visualizations definitely need, imo, are uniform interfaces for mapping domain data to different graphical representations. The Collection API is just my attempt to create such an interface.

Hi Michael,

I fully understand your approach - keep it simple and keep the door open.

I also like the moderated ontology modeling style of Freebase (more or less the idea behind it). On the other hand, as far as I’ve observed in their RDF data, they rarely reuse common ontologies. That’s the crucial point in my mind. I think for a “well-designed” distributed database of linked data, we have to make use of a common stack of well-established, well-designed ontologies (at least to also do the alignment/mapping on the T-Box side). That’s not really the case with Freebase’s proprietary ontology. For example, a concept like “fb:base.websites.website.website” makes reuse really hard. Furthermore, I currently can’t really resolve the meaning of these concepts. However, this may be a bit off-topic for this discussion.

“they use JSON rather than XML”

Semantic graphs aren’t XML. RDF/XML is simply one representation format for them; RDF/JSON and RDF/Turtle (which is as easy to read as JSON) are others. However, people often believe that RDF graphs are always RDF/XML. This is a big mistake of the past, as RDF/XML was initially promoted as RDF itself. I prefer N3 and its subset Turtle.

“So Freebase sees the creation of ontologies as a continuous process”

Ontology modeling is always a continuous process, which should be moderated by experts in the domain that the ontology addresses.

“it’s less verbose, less scientific, and more user-friendly”

Yes, this is a general problem of Semantic Web technologies, in my mind. However, I believe that this technology stack will count among the basics of computer science in the near future, so that every web developer will be able to handle it easily. Today, though, I often observe a gap between Semantic Web developers and web developers. If one keeps in mind that the Semantic Web belongs to the Web, i.e. that there is only one Web, then one may realize that we probably have to go this way.

I don’t want to consume data/knowledge/information from a single information service with a proprietary API. I want to consume data/knowledge/information from every resolvable URI in a standardized way. That means if I want to retrieve an HTML representation of an information resource, I should get it, just as I should get an RDF/Turtle representation of the same information resource when I ask for that.

Regarding your visualization approach, are you planning to close the cycle? That is, would you like to include links to the information resources you’ve retrieved the information from (where possible)?

Closing the data/knowledge/information cycle will also become important in the future. That means we should not only be able to retrieve arbitrary information resources from somewhere, but also be able to contribute data/knowledge/information back to the information service we’ve retrieved them from.
